SPRING 22 PROJECTS

Watch the Spring 2022 Showcase

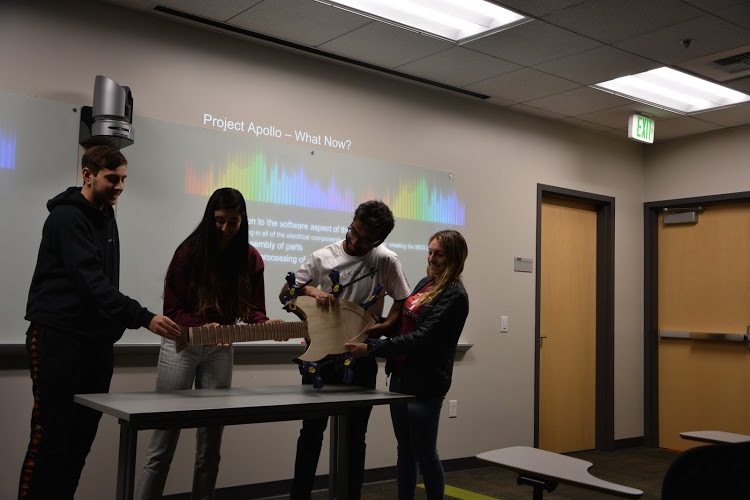

Chord Hero

The main goal of the project was to create a product that predicts and displays the chords that accompany a song. To do this, a user selects a song from YouTube and enters the link into our software. From there, we use signal processing techniques to find the chords and beat of the music. Once that is done, the song is played and the chords are displayed on an LED matrix in the style of “Guitar Hero,” so the user can play along to the selected song on a real keyboard.
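As a rough illustration of the chord-finding step, one common approach is to reduce each slice of audio to a 12-bin chroma vector (energy per pitch class) and compare it against major and minor triad templates. The sketch below shows only that matching step. It assumes the chroma vector has already been computed, and the names `chord_templates`, `cosine`, and `best_chord` are hypothetical; the project's actual method is not described here.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def chord_templates():
    """Build binary 12-bin templates for the 24 major and minor triads."""
    templates = {}
    for root in range(12):
        # Semitone offsets from the root: major = 0, 4, 7; minor = 0, 3, 7.
        for suffix, intervals in (("", (0, 4, 7)), ("m", (0, 3, 7))):
            vec = [0.0] * 12
            for step in intervals:
                vec[(root + step) % 12] = 1.0
            templates[NOTE_NAMES[root] + suffix] = vec
    return templates


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def best_chord(chroma):
    """Return the name of the triad template most similar to the chroma vector."""
    templates = chord_templates()
    return max(templates, key=lambda name: cosine(chroma, templates[name]))


if __name__ == "__main__":
    # Hypothetical chroma with energy on C, E, and G plus a little noise.
    chroma = [0.9, 0.1, 0.0, 0.0, 0.8, 0.1, 0.0, 0.7, 0.0, 0.1, 0.0, 0.0]
    print(best_chord(chroma))
```

Running this matcher once per beat would give a chord sequence that could be sent to a display.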

Compost-O-Matic

Composting is a method of recycling food scraps, yard trimmings, and plant waste into nutrient-rich soil through controlled decomposition. It diverts trash from landfills, reduces methane emissions, and builds soil health. However, composting requires very specific temperature and moisture conditions. Compost-O-Matic is an Internet-connected compost monitoring system that uses smart sensing and analytics to take the guesswork out of maintaining a compost pile. The system takes automated temperature and moisture readings from a compost bin and shares them with the user through a web interface to help inform management of the pile.

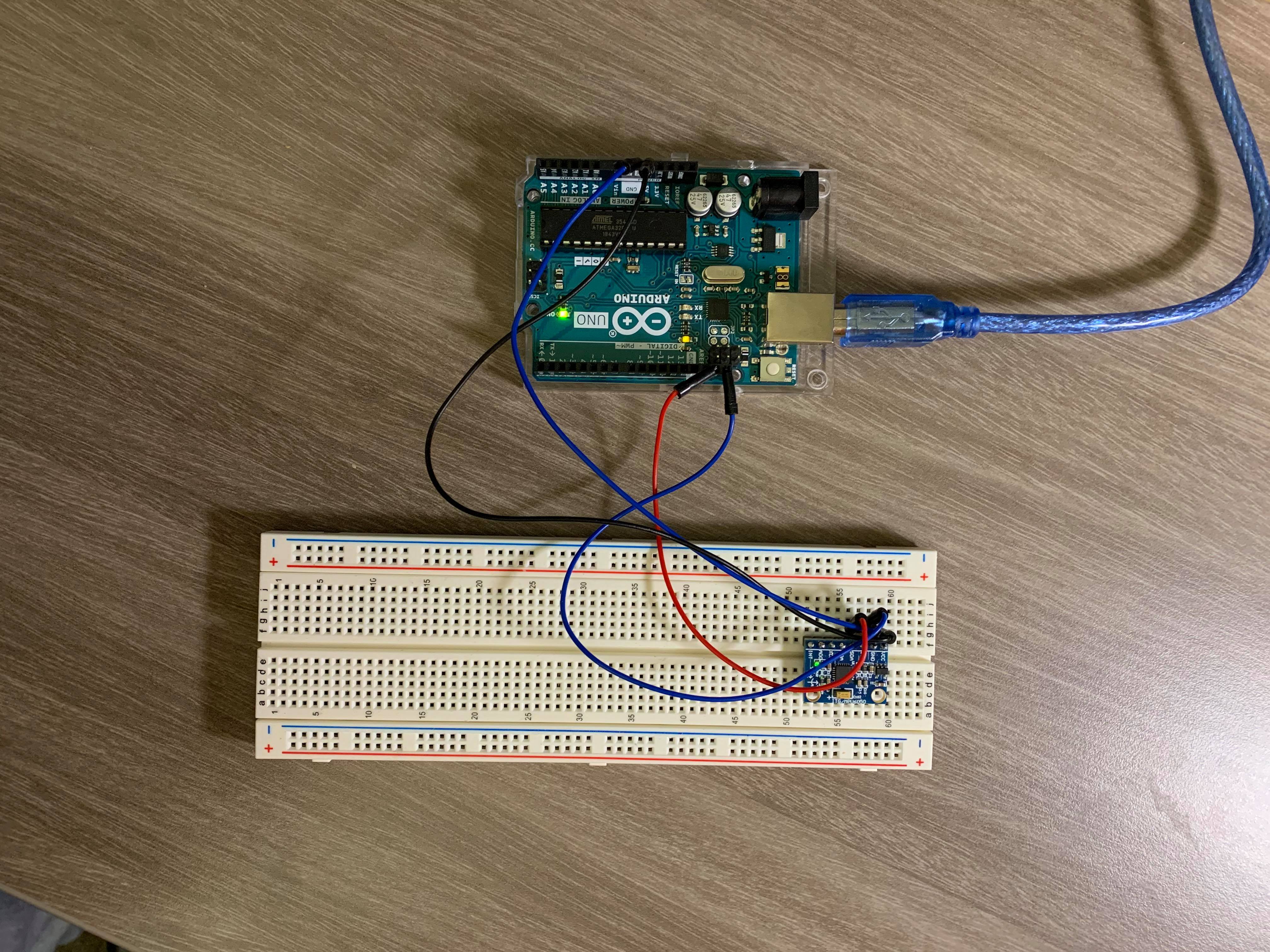

Crani-Arm

This project draws on both biology and engineering, harnessing the body's electrical impulses to control a robotic arm. Several surface electromyography (sEMG) sensors placed on the forearm capture the action potentials that occur when a user contracts their arm muscles. Using machine learning, our project translates the sEMG data into recognizable gestures, which are then reproduced by a robotic hand driven by servo motors.

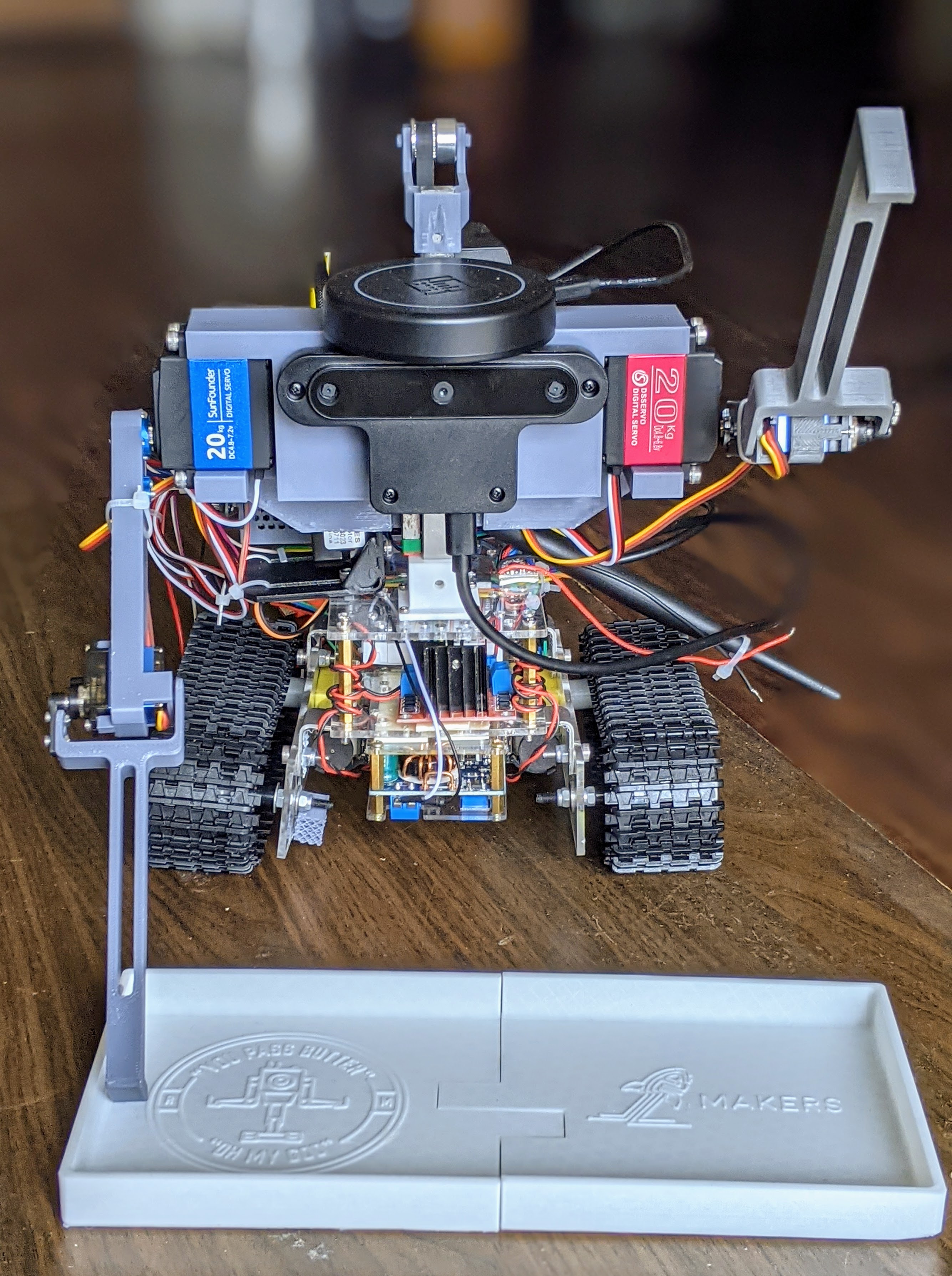

It's Coming Home

It’s Coming Home is a robot that swings a leg to kick a soccer ball from the penalty spot into a chosen location in the goal. A camera on the robot takes a picture of the goal, and the user selects the target location through a user interface. The robot then makes the necessary mechanical adjustments and shoots the ball.

Kooka

Kooka is a cooking assistant robot arm. Currently, it can move a ladle in a stirring motion, which is useful for unclumping pasta while it cooks. Potential future goals include chopping fruits and vegetables and assembling ingredients.

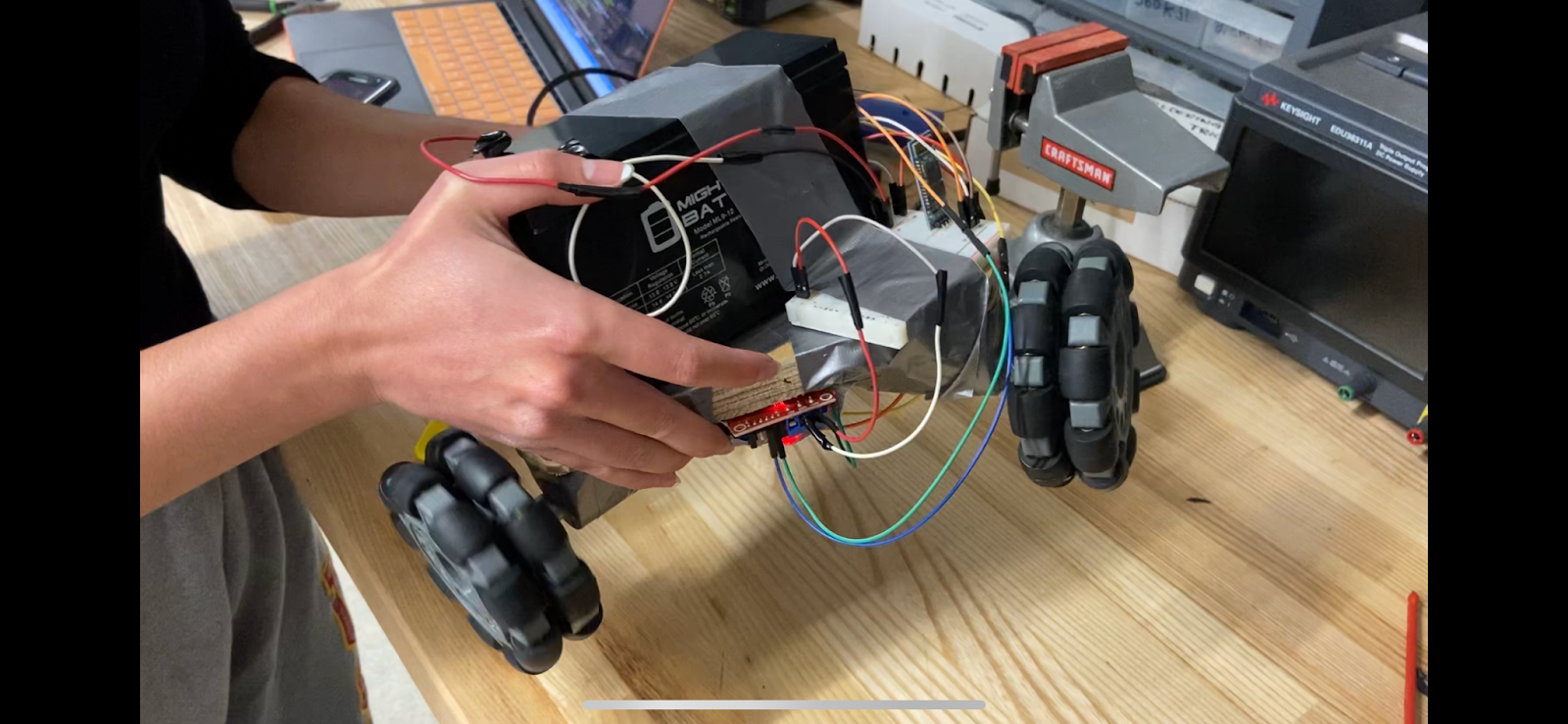

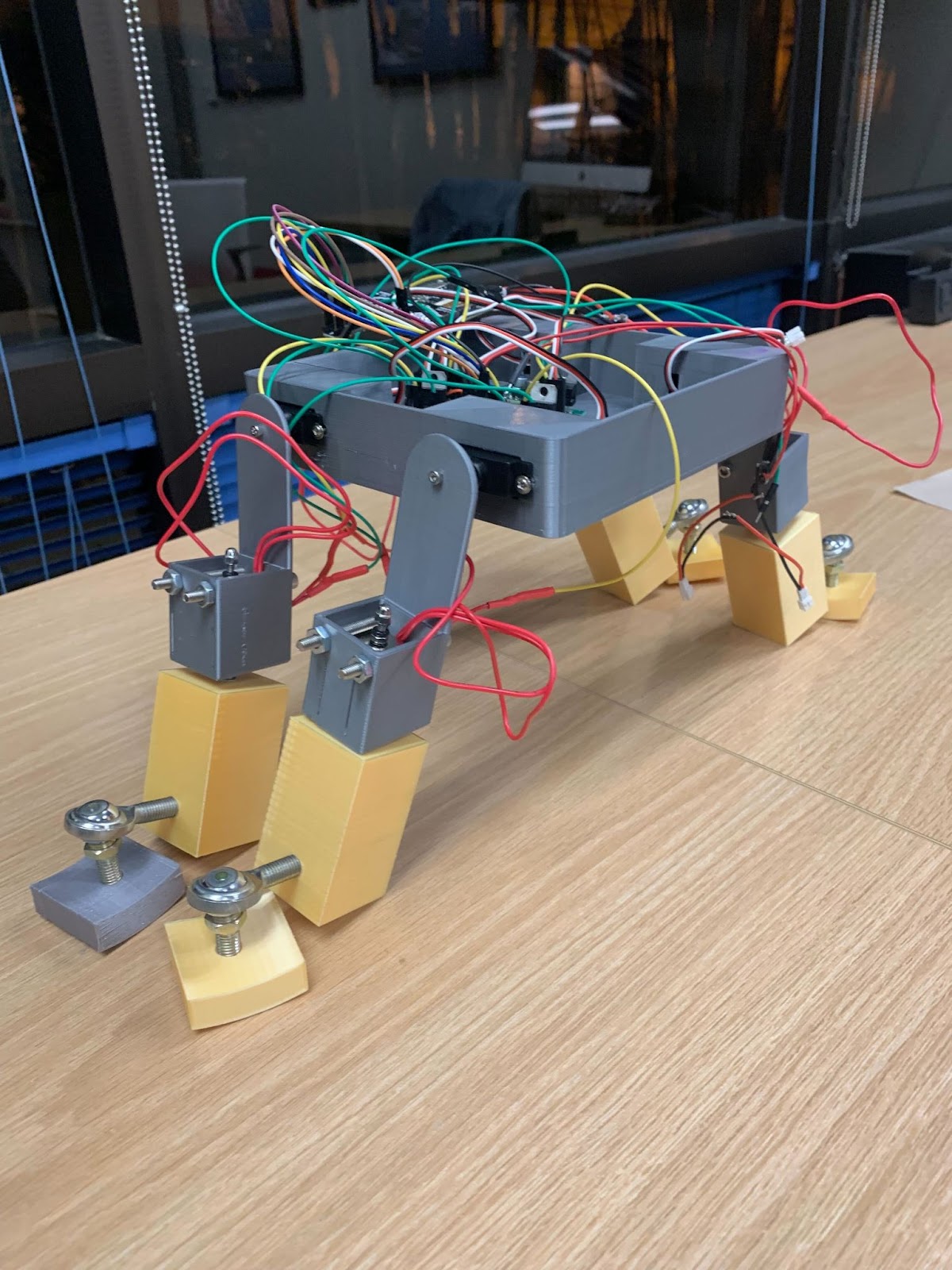

Mag Lynx

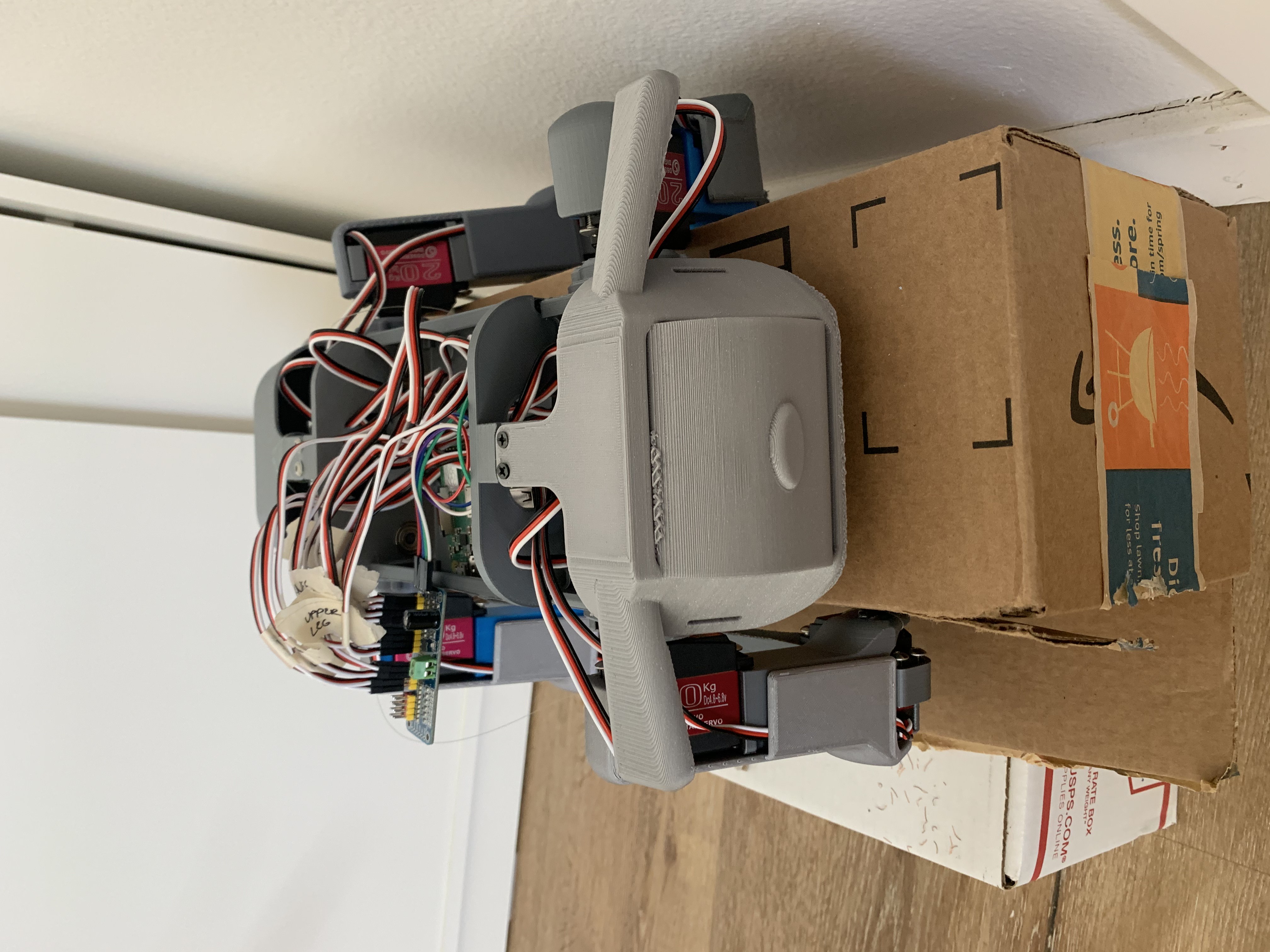

Our project is a 3D-printed quadruped robot that uses solenoids and ball joints to walk while avoiding obstacles and staying upright. Rather than relying on advanced software, our goal was to make the robot capable of walking by exploiting the mechanical properties of its components. The solenoids lift the bottom of the robot’s legs rather than rotating them, allowing it to step over low obstacles on the ground. The ball joints are attached to the feet to keep the robot from tipping when it is off balance. A user can drive the robot forwards and backwards from a phone app over a Bluetooth connection.

Reverse Choreography

Inspired by STEEZY Studios’ “Reverse Choreography” series, this project captures a user's movement or dance and generates a Spotify playlist that fits the energy of the movement. The user first moves in front of a camera to a selected tempo. After the system analyzes the movement data and prompts the user about their general feeling, the playlist is automatically generated and queued on Spotify. Throughout the process, visual effects react to the user's movement and, once the playlist is generated, to the song audio.

Robot Rock

Robot Rock is an automated drumming robot. It can lay down a predefined beat or improvise one with a snare, tom, kick drum, cymbal, and maracas. For visual flair, a llama-corn mascot sits atop the drum throne and shakes its head while LED strips flare to the beat.

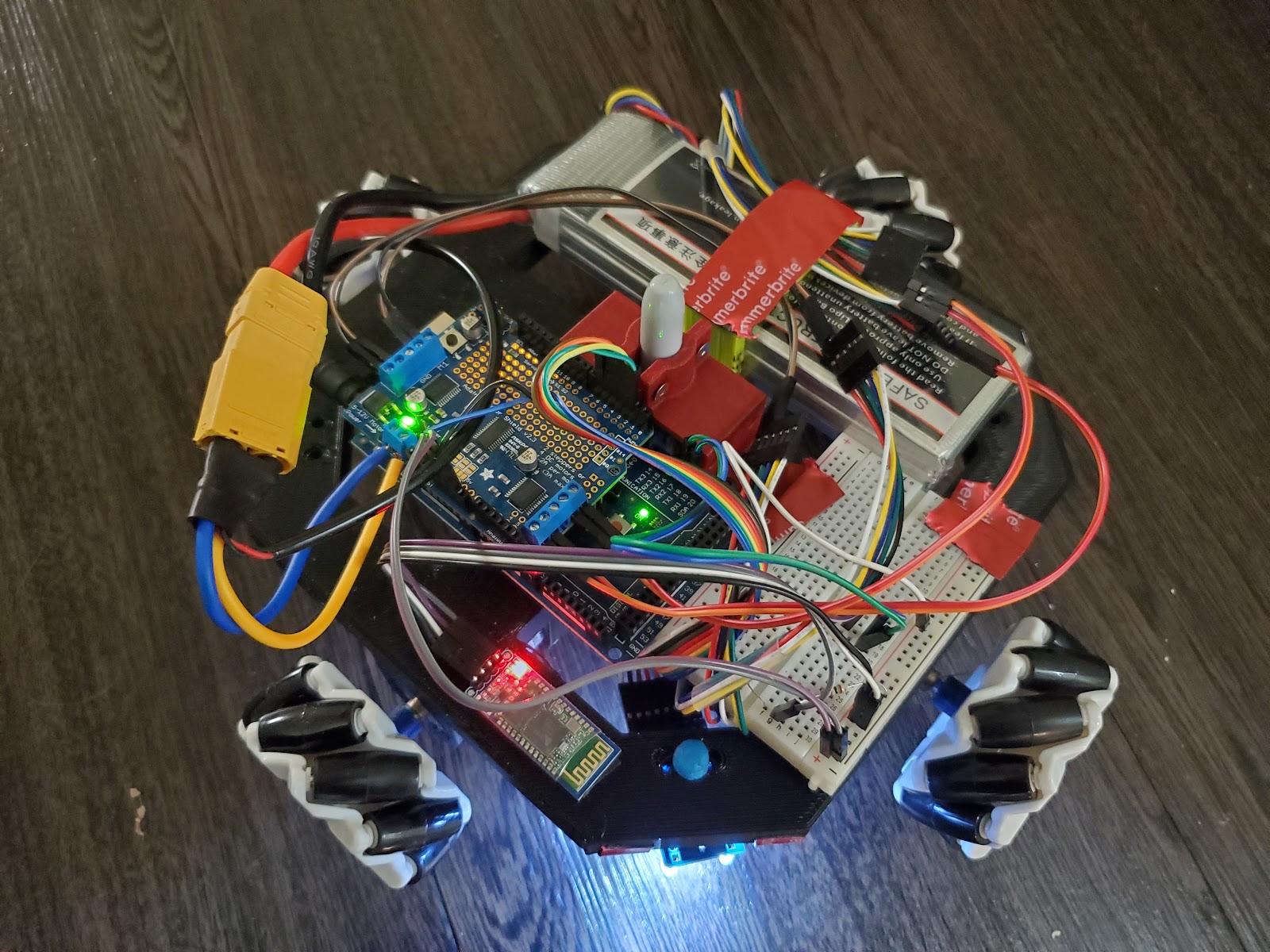

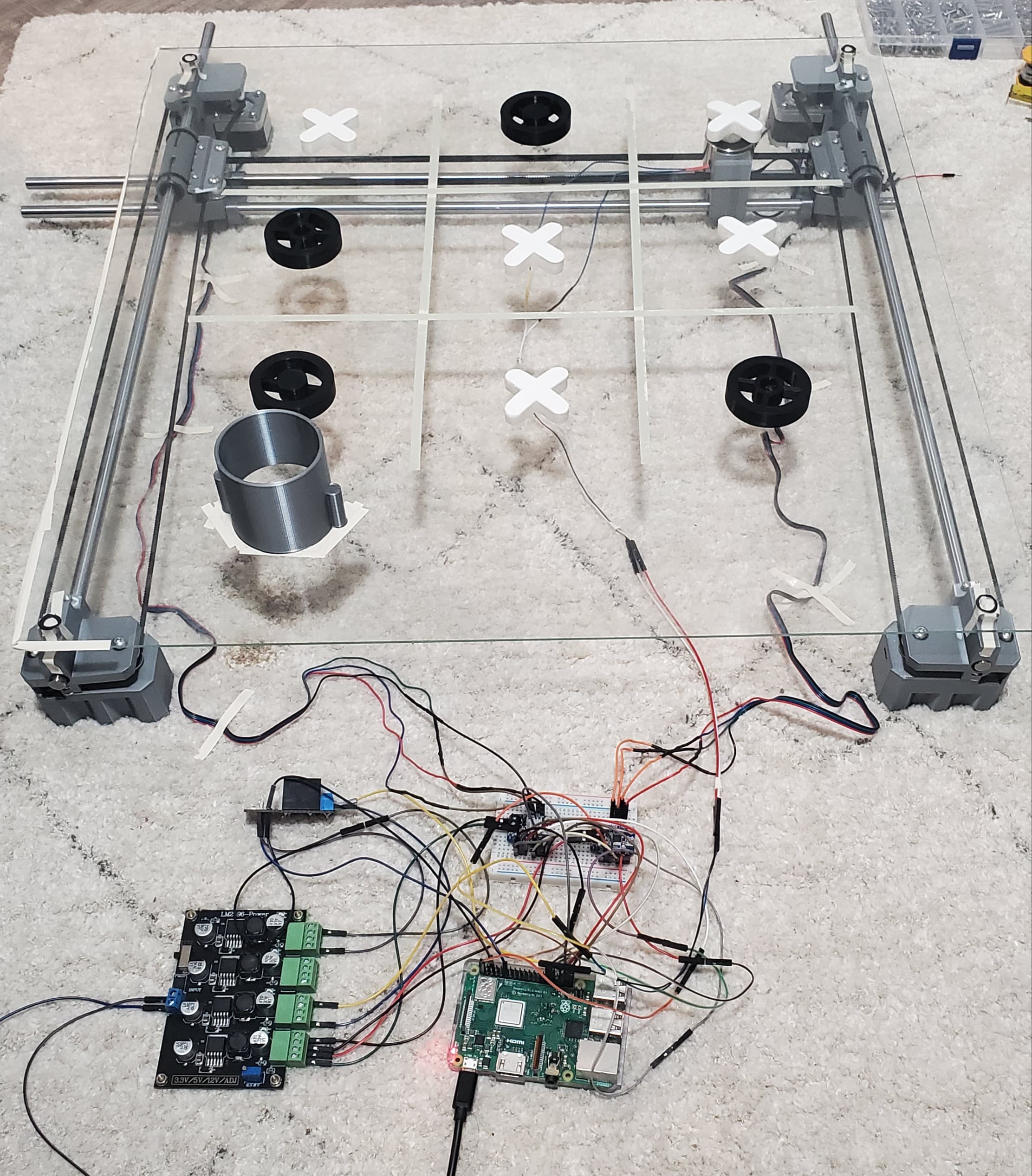

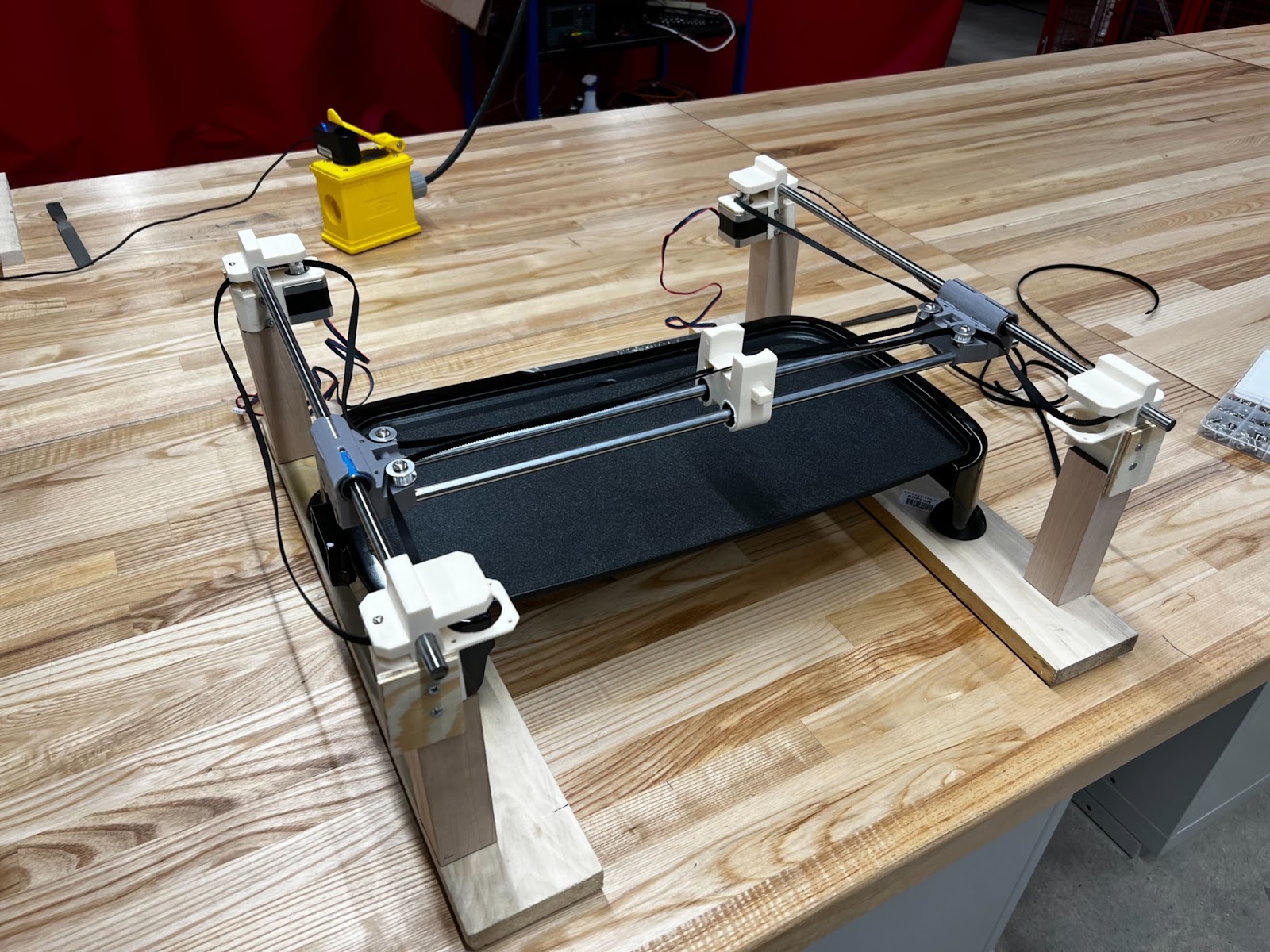

Stacks On Stacks

We designed a robot capable of printing custom pancake designs. Users simply upload an SVG of an image or a hand-drawn design to our web interface and watch the robot make a precise pancake in their desired shape.

HNC

Our main goal is to build Hyperactive Noise Canceling (HNC) headphones, our own version of Active Noise Canceling (ANC) headphones. In general, ANC headphones use microphones to pick up noise outside the headphones, process it digitally, and then play anti-noise to cancel out the background noise. At the most basic level, this involves figuring out the frequencies, amplitudes, and phases of the background noise. Our HNC technology can be implemented on any pair of headphones and will be able to selectively cancel out sine waves of different frequencies.
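To make the anti-noise idea concrete, here is a minimal offline sketch, not the HNC implementation. It assumes the noise is a single steady sine wave, estimates its frequency, amplitude, and phase by correlating the recording with candidate frequencies (a single-bin DFT), and then builds an inverted sine that sums with the noise to roughly zero. The function names, sample rate, and candidate frequency grid are all hypothetical choices for illustration; a real headphone system would also have to work in real time.

```python
import cmath
import math


def estimate_tone(samples, sample_rate, freq_hz):
    """Estimate amplitude and phase of a sine at freq_hz (single-bin DFT).

    Phase is reported for the convention x[i] = A * sin(2*pi*f*i/sr + phase).
    """
    n = len(samples)
    acc = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * i / sample_rate)
        for i, x in enumerate(samples)
    )
    amplitude = 2 * abs(acc) / n
    phase = cmath.phase(acc) + math.pi / 2  # shift from cosine to sine reference
    return amplitude, phase


def dominant_tone(samples, sample_rate, candidates_hz):
    """Pick the candidate frequency with the largest estimated amplitude."""
    best = max(
        candidates_hz,
        key=lambda f: estimate_tone(samples, sample_rate, f)[0],
    )
    amplitude, phase = estimate_tone(samples, sample_rate, best)
    return best, amplitude, phase


def anti_noise(freq_hz, amplitude, phase, sample_rate, n):
    """Generate the inverted sine that cancels the estimated tone."""
    return [
        -amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate + phase)
        for i in range(n)
    ]


if __name__ == "__main__":
    sample_rate, n = 8000, 800  # assumed values: 0.1 s of audio at 8 kHz
    noise = [
        0.5 * math.sin(2 * math.pi * 440 * i / sample_rate + 0.7)
        for i in range(n)
    ]
    freq, amp, phase = dominant_tone(noise, sample_rate, range(100, 1001, 10))
    cancel = anti_noise(freq, amp, phase, sample_rate, n)
    residual = max(abs(a + b) for a, b in zip(noise, cancel))
    print(freq, round(amp, 3), round(phase, 3), residual)
```

The 10 Hz candidate spacing matches the frequency resolution of the 0.1 s window, which is why the estimate is clean in this example; a tone that falls between candidates would be estimated less accurately.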

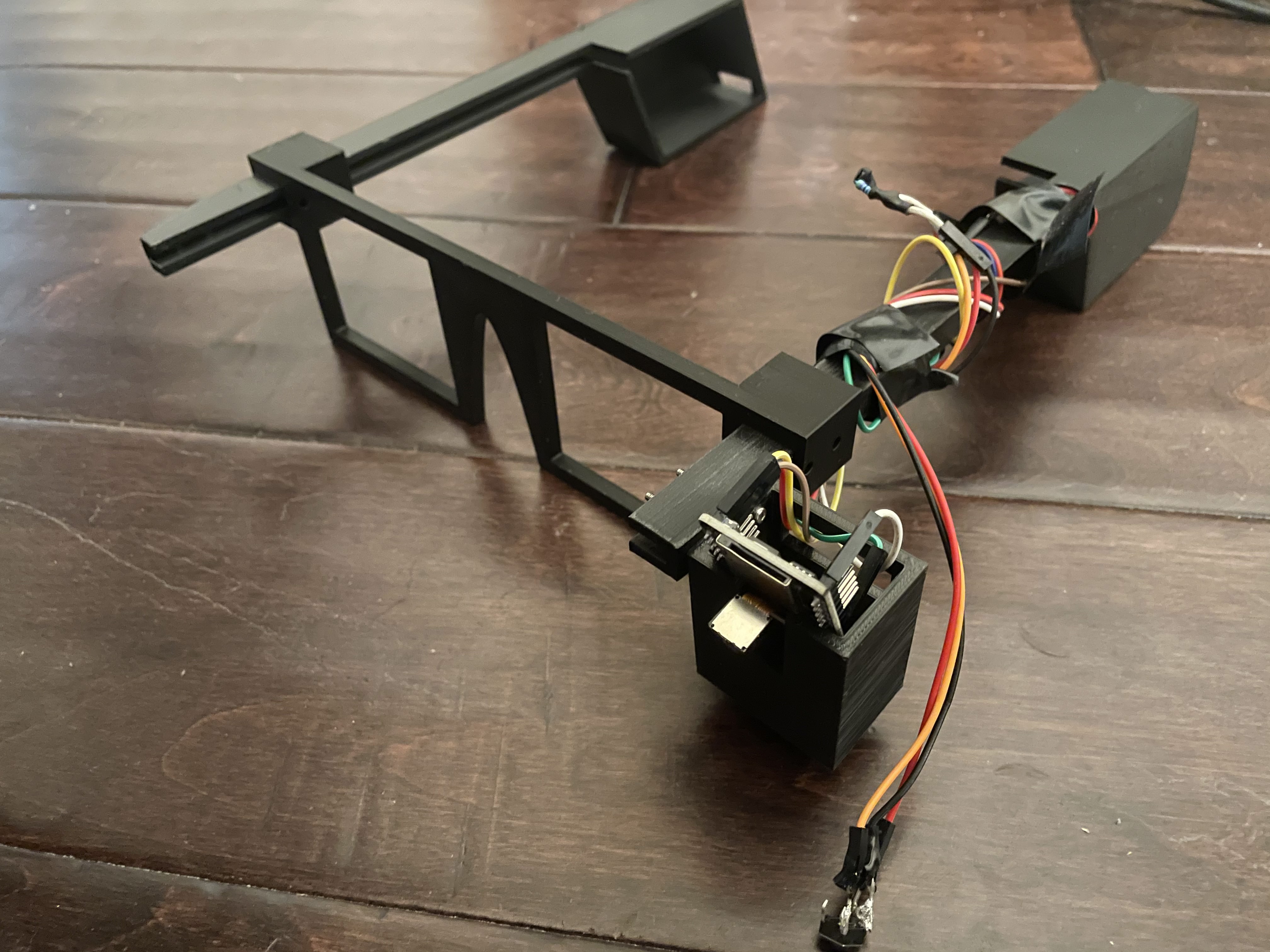

Safeboard

Our main goal this semester was to build a detachable skateboard safety module that not only improves the user’s spatial awareness but also alerts others to the user’s movements and actions. Here at USC, skateboarding is very popular among students, with people riding throughout campus and on the streets of South Central LA. Almost everyone here knows of students who have been in skateboarding accidents, which can be very dangerous. Our system makes skateboarding safer by adding rear and blind-spot detection and automatic turn blinkers, which alert the user to their surroundings and alert nearby cars and pedestrians to the user.